Data Access and LLMO: Insights from Microsoft Copilot Strategies

- Sebastian Holst

- Jan 9, 2025

- 3 min read

Microsoft Copilot and Copilot for M365 have sparked excitement across organizations looking to boost productivity through AI-driven suggestions. But along with the promise of streamlined workflows comes the need to ensure sensitive data stays protected. A recent LinkedIn article, “10 Ways to Hide Data for Microsoft Copilot and Copilot for M365,” took on this topic, describing a variety of methods that range from Microsoft 365 security tools to more content-centric approaches like anonymization and redaction.

It was the “content-centric” approaches that caught my eye, as I realized that they are a special case of Large Language Model Optimization (LLMO). And while none of these particular patterns is unique to LLMO, the article implies that the justification for implementing these controls is LLMO (or, as the article puts it, limiting LLM comprehension).

LLMO for Access Control

Here are two LLMO tactics from the “10 Ways…” article referenced above.

Tagging (metadata)

Sensitivity Labels: Classify documents or emails as “Confidential” or “Highly Confidential,” ensuring they remain inaccessible to Copilot by default. (This is a Copilot-specific tactic that would need to be evaluated when using other LLM-centered platforms.)

Content Modification

Redaction and Anonymization: Redacted copies remove sensitive details; masked data and placeholders (e.g., [REDACTED], NAME_PLACEHOLDER, or XXXX-XXXX-XXXX-XXXX) preserve document structure without exposing real information.
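To make the masking idea concrete, here is a minimal Python sketch of how a redacted copy might be produced. It is an illustration only, not how the referenced article or Microsoft 365 implements redaction. The function name `redact`, the card-number pattern, and the sample sentence are all assumptions; the placeholder strings are the ones mentioned above.

```python
import re

# Assumed format: card numbers written as four groups of four digits,
# separated by hyphens or spaces. Real data will need broader patterns.
CARD_PATTERN = re.compile(r"\b\d{4}[- ]\d{4}[- ]\d{4}[- ]\d{4}\b")


def redact(text: str, names: list[str]) -> str:
    """Return a copy of text with card numbers and the listed names masked."""
    masked = CARD_PATTERN.sub("XXXX-XXXX-XXXX-XXXX", text)
    for name in names:
        # Whole-word match, so masking "Ann" leaves "Annual" untouched.
        masked = re.sub(rf"\b{re.escape(name)}\b", "NAME_PLACEHOLDER", masked)
    return masked


sample = "Jane Doe paid with 4111-1111-1111-1111 on the Annual plan."
print(redact(sample, ["Jane Doe"]))
```

The sentence structure survives while the name and card number are replaced, which is the property the placeholder approach relies on.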

LLMO for Access Control: What’s missing?

These tactics effectively shield information from Copilot, but do they go far enough to guide how the model understands the modified and anonymized data?

For example, “Contoso” might appear as a placeholder name for an anonymized customer in some content and as the name of a fictional client in demonstrations and use case narratives. How can we ensure that both uses are properly excluded from whatever generalizations and connections about actual clients Copilot (or another LLM) is asked to describe?

Optimized LLM Data Access Control

Large Language Model Optimization (LLMO) encompasses all the ways (and reasons) we prepare our data for AI. For access control scenarios, this includes how we label, redact, or substitute information. Effective LLMO for access control must:

Mitigate Risk: (non-negotiable) Ensure access controls are enforced (and verifiable) to prevent accidental leaks or exposures.

While optimizing for the following (without compromising access control):

Clarity: Ensuring that anything artificial (like a placeholder) is recognized as such, preventing Copilot (and others) from treating pseudo data as real data.

Contextual Accuracy: Delivering relevant insights without mixing fiction with reality.

For example, by marking “Contoso” as a mock company within your data, you reduce the risk that the AI might reference it as if it were a genuine client in other outputs or analytics.
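One way to picture this kind of marking is a small Python sketch that prepends an explicit notice to any content mentioning a known synthetic name. This is a hypothetical approach, not a Copilot feature: the registry, the `label_synthetic` function, and the notice format are assumptions, and whether a given LLM honors such a notice would need to be tested.

```python
import re

# Hypothetical registry of names known to be artificial, mapped to a
# short description of what each one stands for.
SYNTHETIC_REGISTRY = {"Contoso": "mock company"}


def label_synthetic(text: str, registry: dict[str, str]) -> str:
    """Prepend a notice naming every registered synthetic entity found in text."""
    found = sorted(
        name for name in registry
        if re.search(rf"\b{re.escape(name)}\b", text)
    )
    if not found:
        return text  # Nothing artificial detected; leave the content as-is.
    notes = "; ".join(f"{name} is a {registry[name]}" for name in found)
    return f"[SYNTHETIC DATA NOTICE: {notes}]\n{text}"


print(label_synthetic("Contoso renewed its contract.", SYNTHETIC_REGISTRY))
```

Keeping the registry separate from the content means the same list of mock names can be applied consistently across documents, demos, and anonymized records.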

LLMO Access Control Beyond Copilot

One crucial consideration is that some Copilot-specific controls won’t necessarily apply to other LLMs (such as ChatGPT or specialized industry models). If an organization considers these data-protection measures essential, these controls must be paired with the prohibition of any LLM that cannot support those controls.
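The pairing of required controls with a prohibition can be sketched as a simple policy check in Python. Everything here is hypothetical: the control names, the platform names, and the capability sets are placeholders and do not describe what any real product supports.

```python
# Hypothetical controls the organization considers essential.
REQUIRED_CONTROLS = {"sensitivity_labels", "redaction_placeholders"}

# Hypothetical inventory of which controls each platform can enforce.
PLATFORM_CONTROLS = {
    "platform_a": {"sensitivity_labels", "redaction_placeholders"},
    "platform_b": {"redaction_placeholders"},
}


def permitted_platforms(platforms: dict[str, set[str]], required: set[str]) -> list[str]:
    """Return the platforms that support every required control."""
    return sorted(
        name for name, supported in platforms.items()
        if required <= supported  # subset test: all required controls present
    )


print(permitted_platforms(PLATFORM_CONTROLS, REQUIRED_CONTROLS))
```

Any platform missing from the returned list would be prohibited under this policy until it can support the missing controls.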

Conclusion: A Holistic Approach to AI Governance

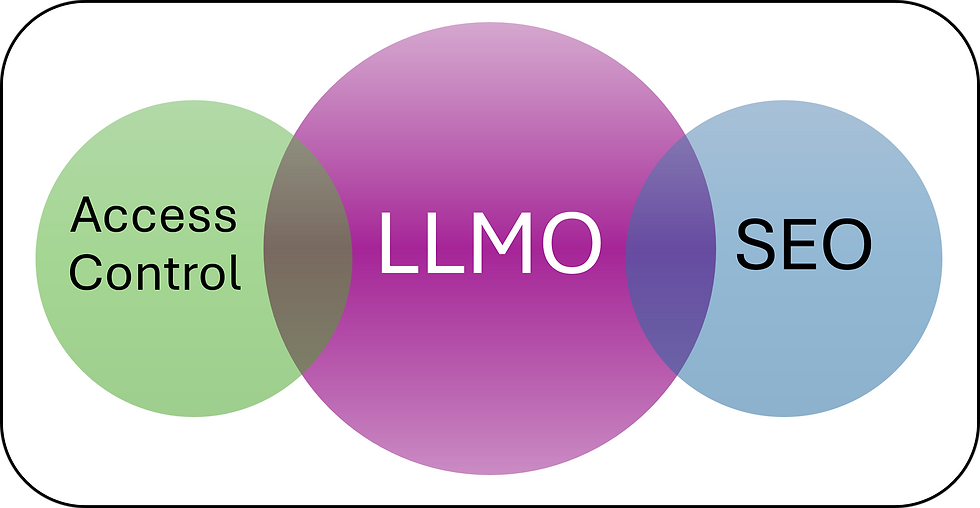

Putting these strategies into practice means recognizing that data access controls and LLMO overlap, in much the same way that LLMO could be seen as overlapping with SEO.

Key Takeaways

Some methods for hiding data from Copilot function as LLMO, shaping how AI models understand or handle content.

Be mindful of LLM-specific capabilities: controls that work for Copilot won’t necessarily apply to other LLMs.

A combination of traditional security measures and content-focused strategies provides the most robust approach to AI governance.
